Agents Course smolagents Framework

After releasing a bonus unit on fine-tuning an LLM last week, the Hugging Face crew is back this week with Unit 2. This unit focuses on the smolagents framework (it’s spelled with a lowercase s on purpose, hehe). From now on, every new unit will feature a new framework, such as LlamaIndex and LangGraph.

I must say that this unit feels like a bit of a step down in quality compared to the previous unit. Some of the notebooks appear to be out of date and need small code modifications, and some of the integrations require paid subscriptions. That’s not a huge problem, as students can still follow along without running the code. However, the biggest issue is the final quiz, but we will come back to that.

The information in this Unit was quite distilled and I found that reading the articles from the Further Reading sections was necessary in order to understand the material.

I like summarising my notes from courses because it’s a great way to cement the learnings. This unit did not include a practical aspect other than encouraging the learners to go and build their own agents using what they learned. Key topics covered in this unit include:

Why Use Smolagents

Smolagents is a lightweight framework designed to create AI agents that can interact with tools, retrieve information, and solve tasks efficiently. It emphasizes simplicity, modularity, and ease of integration, making it a great choice for developers who want to build AI-powered applications without heavy dependencies.

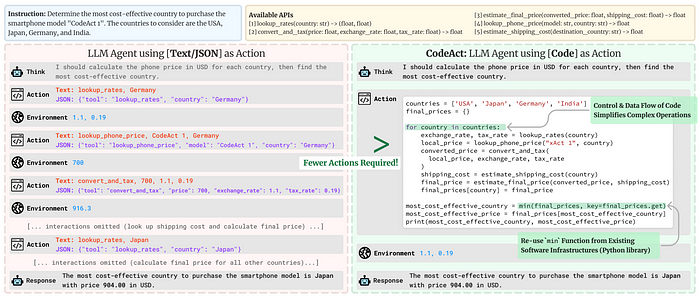

CodeAgents

Code agents are the default agent type in smolagents. They generate Python tool calls to perform actions, achieving action representations that are efficient, expressive, and accurate. They also offer sandboxed execution for security and support both local and API-based language models, making them adaptable to various development environments.

CodeAgents are AI agents specialized in generating, executing, and debugging code. They use language models to write scripts, run them, and refine outputs based on feedback. These agents are useful for automating coding tasks, software prototyping, and even self-improving AI workflows.
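
To make the idea of a "code action" concrete, here is a toy sketch in plain Python. It is not how smolagents is implemented: `run_code_action`, `web_search`, and the hard-coded `model_output` string are hypothetical stand-ins for the executor, a tool, and the model's reply. Stripping builtins as done here is also not a real sandbox; it only illustrates that the snippet sees the tools it was given.

```python
# Toy illustration of a "code action": the model's output is a Python snippet
# that calls tools directly. All names here are hypothetical, not smolagents API.


def web_search(query: str) -> str:
    """Stand-in tool that returns a canned result instead of searching the web."""
    return f"results for {query!r}"


def run_code_action(snippet: str, tools: dict) -> dict:
    """Run a snippet with only the given tools and a few builtins in scope.

    NOTE: restricting builtins is NOT a real sandbox; it only shows the idea.
    """
    allowed_builtins = {"len": len, "str": str, "print": print}
    namespace = {"__builtins__": allowed_builtins, **tools}
    exec(snippet, namespace)
    # Return only the variables that the snippet itself created.
    return {
        key: value
        for key, value in namespace.items()
        if key not in tools and key != "__builtins__"
    }


# Pretend this string came back from the language model.
model_output = """
hits = web_search("smolagents")
answer = hits.upper()
"""

state = run_code_action(model_output, {"web_search": web_search})
print(state["answer"])
```

The point of the sketch is that a single generated snippet can call a tool, store the result in a variable, and post-process it, all in one step.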

ToolCallingAgents

Tool Calling Agents are the second type of agent available in smolagents. Unlike Code Agents that use Python snippets, these agents use the built-in tool-calling capabilities of LLM providers to generate tool calls as JSON structures. This is the standard approach used by OpenAI, Anthropic, and many other providers.

The key difference from CodeAgents is in how they structure their actions: instead of executable code, they generate JSON objects that specify tool names and arguments. The system then parses these instructions to execute the appropriate tools.
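
The parse-and-dispatch step can be sketched with the standard library alone. This is an illustration, not smolagents code: the `{"name": ..., "arguments": ...}` shape is an assumed format (providers differ in the exact schema), and `get_weather`, `TOOLS`, and `dispatch_tool_call` are hypothetical names.

```python
import json


def get_weather(city: str) -> str:
    """Stand-in tool that returns a canned answer."""
    return f"Sunny in {city}"


# Registry mapping tool names to callables (hypothetical, for illustration).
TOOLS = {"get_weather": get_weather}


def dispatch_tool_call(raw: str) -> str:
    """Parse a JSON tool call and run the matching tool with its arguments."""
    call = json.loads(raw)
    name = call["name"]
    if name not in TOOLS:
        raise ValueError(f"unknown tool: {name}")
    return TOOLS[name](**call.get("arguments", {}))


# Pretend this JSON string came back from the language model.
model_output = '{"name": "get_weather", "arguments": {"city": "Paris"}}'
result = dispatch_tool_call(model_output)
print(result)
```

Compared with a code action, each JSON call names exactly one tool, so chaining several tools takes several model turns.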

Tools

Tools in smolagents are external functions or APIs that agents can use to complete tasks. These range from simple utilities like a calculator to complex services like web search, weather forecasts, or database queries.

In smolagents, tools can be defined in two ways:

- Using the @tool decorator for simple function-based tools

- Creating a subclass of Tool for more complex functionality
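
The two styles of tool definition can be illustrated with a toy re-creation in plain Python. This is not the smolagents implementation or its API: `simple_tool` and `BaseTool` are hypothetical stand-ins that only loosely mirror the decorator-versus-subclass split, and the real library has its own requirements for tool metadata.

```python
import inspect


class BaseTool:
    """Hypothetical base class: subclasses set name/description and implement forward()."""

    name = "base"
    description = ""

    def forward(self, *args, **kwargs):
        raise NotImplementedError

    def __call__(self, *args, **kwargs):
        return self.forward(*args, **kwargs)


def simple_tool(func):
    """Hypothetical decorator: wrap a function as a tool, reusing its name and docstring."""

    class FunctionTool(BaseTool):
        name = func.__name__
        description = inspect.getdoc(func) or ""

        def forward(self, *args, **kwargs):
            return func(*args, **kwargs)

    return FunctionTool()


# Style 1: a plain function turned into a tool by a decorator.
@simple_tool
def add(a: int, b: int) -> int:
    """Add two integers."""
    return a + b


# Style 2: a subclass, useful when the tool needs state or setup logic.
class TemperatureConverter(BaseTool):
    name = "celsius_to_fahrenheit"
    description = "Convert a temperature from Celsius to Fahrenheit."

    def forward(self, celsius: float) -> float:
        return celsius * 9 / 5 + 32


converter = TemperatureConverter()
print(add.name, add(2, 3))
print(converter.name, converter(100))
```

Either way, the agent ends up with an object that carries a name, a description the model can read, and something callable.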

smolagents comes with a set of pre-built tools that can be directly injected into an agent. However, the framework also allows importing tools created by other people from the Hub, importing an entire Hugging Face Space as a tool, or importing a LangChain tool.

Retrieval Agents

Agentic RAG (Retrieval-Augmented Generation) extends traditional RAG systems by combining autonomous agents with dynamic knowledge retrieval.

Basic retrieval can be done with the DuckDuckGo tool, which was also used in Unit 1. For specialised tasks, a custom knowledge base can be created: a tool that queries a vector database of technical documentation or specialised knowledge. Using semantic search, the agent can then find more relevant information for the user’s needs than the standard retrieval tool. Techniques like vector search and embeddings improve response accuracy, making them essential for knowledge-based AI applications.
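
As a rough illustration of the retrieval step, the sketch below ranks a tiny in-memory "knowledge base" by cosine similarity. Everything in it is an assumption made for the example: `DOCS`, `embed`, `cosine`, and `retrieve` are hypothetical names, and word counts stand in for the learned embeddings and vector database a real setup would use.

```python
import math
import re
from collections import Counter

# Tiny stand-in knowledge base (hypothetical content).
DOCS = [
    "CodeAgent writes Python snippets that call tools.",
    "ToolCallingAgent emits JSON tool calls.",
    "Vector search ranks documents by embedding similarity.",
]


def embed(text: str) -> Counter:
    """Toy 'embedding': lowercase word counts. Real systems use a learned model."""
    return Counter(re.findall(r"[a-z]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[word] * b[word] for word in a)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(query: str, k: int = 1) -> list[str]:
    """Return the k documents most similar to the query."""
    query_vec = embed(query)
    ranked = sorted(DOCS, key=lambda doc: cosine(query_vec, embed(doc)), reverse=True)
    return ranked[:k]


top = retrieve("which agent emits JSON tool calls")
print(top[0])
```

Wrapped as a tool, a function like `retrieve` is what lets the agent decide for itself when to look something up and what to search for.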

Multi-Agent Systems

Multi-Agent Systems involve multiple AI agents working together, each with a specialized role. These agents communicate, collaborate, and delegate tasks, enabling more complex problem-solving scenarios, such as automating workflows, managing large datasets, or simulating real-world decision-making.

A typical setup might include:

- A Manager Agent for task delegation

- A Code Interpreter Agent for code execution

- A Web Search Agent for information retrieval
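
The delegation pattern in the setup above can be sketched in a few lines. This is a toy under stated assumptions, not smolagents code: `ManagerAgent`, `web_search_agent`, and `code_interpreter_agent` are hypothetical, and the keyword check stands in for the LLM that would normally decide which agent to call.

```python
from typing import Callable


def web_search_agent(task: str) -> str:
    """Stand-in sub-agent for information retrieval."""
    return f"[web] looked up: {task}"


def code_interpreter_agent(task: str) -> str:
    """Stand-in sub-agent for code execution."""
    return f"[code] executed: {task}"


class ManagerAgent:
    """Hypothetical manager that routes each task to one sub-agent.

    A real manager would let an LLM choose; a keyword check is used here
    only to keep the example self-contained.
    """

    def __init__(self, workers: dict[str, Callable[[str], str]]):
        self.workers = workers

    def run(self, task: str) -> str:
        needs_code = any(
            word in task.lower() for word in ("compute", "calculate", "run")
        )
        return self.workers["code" if needs_code else "web"](task)


manager = ManagerAgent({"web": web_search_agent, "code": code_interpreter_agent})
print(manager.run("Calculate 2 + 2"))
print(manager.run("Find the latest smolagents release"))
```

Keeping each sub-agent behind a simple callable interface is what makes it easy to add or swap specialists without touching the manager.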

Vision and Browser Agents

Vision Agents process and analyze images, allowing AI to interpret visual data, recognize objects, and generate insights. smolagents provides built-in support for vision-language models (VLMs) for this purpose.

Browser Agents automate web interactions, such as scraping data, navigating pages, and filling forms, allowing AI to gather information from online sources dynamically.

Final Quiz

The Final Quiz was a doozy. It seems that an LLM is used to grade the code, and if the submitted code doesn’t exactly match the template code, it is treated as a wrong answer. Getting through it required quite a lot of back and forth. I won’t actually put the answers here, but I will mention the main issues/tricks to be aware of. You can find the answers that worked for me on my GitHub.

Question 1 Create a CodeAgent with DuckDuckGo search capability

The answer can be found in the code_agents.ipynb notebook from the Building Agents that Use Code section. However, the main issue here is that the grader expects the student to specifically set model_id="Qwen/Qwen2.5-Coder-32B-Instruct". This was a bit confusing because that is also the default model, so technically the code would work without specifying the model_id.

Question 2 Set Up a Multi-Agent System with Manager and Web Search Agents

The answer can be found in the multiagent_notebook notebook from the Multi-Agents section. This question is more difficult, as it asks the student to first create the web_agent and then create the manager_agent which manages the web_agent. Initially I used GoogleSearchTool as the search tool, but it seems that the solution is looking for the DuckDuckGo tool specifically.

I actually had to retry this question several times because it kept getting marked as wrong for lots of silly reasons, including the fact that I used a wrong name or wrong description. It seems that any deviation from the hardcoded solution invalidates the answer even though it’s functionally correct. The worst error I got was: “Although the additional imports are the same, the order differs, which is not a functional issue but may indicate lack of attention to detail as per the reference solution.” I think Hugging Face really need to review these questions.

Question 3 Configure Agent Security Settings

This question is really badly written. It requires the student to import E2BSandbox and use it as a parameter. However, this sandbox was never mentioned in the actual course. I had to go digging all over the smolagents documentation for it. For some reason it also wants numpy in additional_authorized_imports. I literally had to figure this out from the errors that kept popping up.

Question 4 Implement a Tool-Calling Agent

The answer can be found in the tool_calling_agents notebook from the Writing actions as code snippets or JSON blobs section. This is a simple question whose answer can be taken from the notebook, but make sure you give the agent a name and a description and, for some reason, set max_steps to 5.

Question 5 Set Up Model Integration

This question was another doozy and gave little indication of what actually needs to be done. I figured out the answer for this one from a combination of reading the Discord server for the course and the error from the marker, which said that it wanted claude-3-sonnet as the model. Who knows why.